New Analysis Suggests That Commercial Quantum Computers Are Kinda Slow

Credit to Author: Michael Byrne| Date: Mon, 18 Sep 2017 16:50:24 +0000

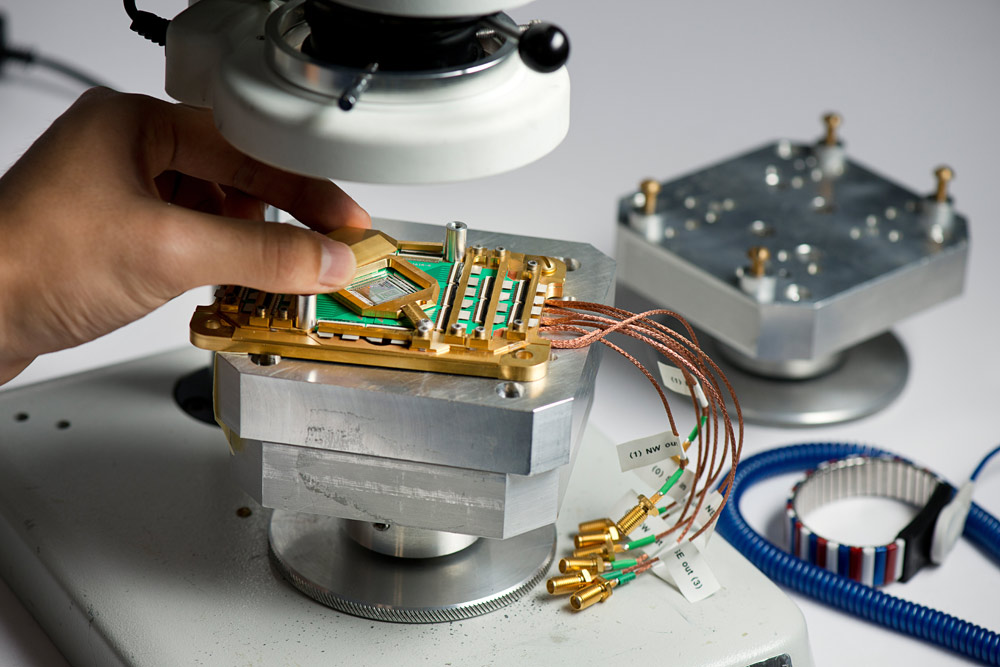

Depending on who you ask, quantum computing is a largely theoretical concept backed by tentative experimentation or it’s a technology that’s here right now and can be had for about $15 million from a Canadian company called D-Wave.

The answer depends a bit on how you ask the question. Most everyone agrees that a general-purpose quantum computer (one that’s like a superfast, supersecure version of the computers we’re all used to) is a ways away. What puts a kink in the quantum timeline is D-Wave’s 2000Q system, a kind of quantum computer based on what’s known as quantum annealing. It’s not a general-purpose quantum computer, but it does some quantum stuff. The question is whether that quantum stuff really matters: that is, whether the D-Wave system is actually faster than a classical computer.

A paper out this week in Physical Review Letters suggests that quantum annealing is probably not faster than classical computing given real-world temperature constraints. Tameem Albash and colleagues from the University of Southern California, Los Angeles, conducted a thermodynamic analysis of a model quantum annealer and found that such computers are critically limited by the temperature at which they operate. At current operating temperatures, which are very, very cold, such machines are unlikely to be reaching truly optimal solutions, the paper argues.


Quantum annealing is a computing paradigm that exploits the natural tendencies of a quantum system. Generally, it solves optimization problems: given some big equation with a bunch of variables, what values of those variables make the equation spit out the smallest possible result? Because particles are always jostling around randomly via quantum fluctuations, they’re able to try out many different possible states very quickly. The idea, then, is that these particles can be used to search for optimal values quickly.
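To make "find the values that minimize the equation" concrete, here is a sketch of the kind of problem D-Wave’s annealers target: an Ising-style energy over variables that are each -1 or +1. It is solved here with simulated annealing, the classical cousin of quantum annealing, where random thermal-style moves (not quantum fluctuations) explore candidate solutions while a temperature parameter is slowly lowered. The function names, the six-spin toy problem, and the cooling schedule are hypothetical choices for illustration; this is not D-Wave’s software or the method used in the paper.

```python
import itertools
import math
import random

# Hypothetical toy example: classical simulated annealing on a tiny Ising model.
# It illustrates the optimization task an annealer targets; it is NOT quantum
# annealing and NOT D-Wave's software.

def ising_energy(spins, couplings, fields):
    """E(s) = sum over (i, j) of J_ij * s_i * s_j + sum over i of h_i * s_i, with s_i in {-1, +1}."""
    energy = sum(j * spins[a] * spins[b] for (a, b), j in couplings.items())
    energy += sum(h * s for h, s in zip(fields, spins))
    return energy


def brute_force_minimum(couplings, fields):
    """Check all 2**n spin configurations (only feasible for tiny n)."""
    best = min(itertools.product((-1, 1), repeat=len(fields)),
               key=lambda s: ising_energy(s, couplings, fields))
    return list(best), ising_energy(best, couplings, fields)


def simulated_annealing(couplings, fields, n_steps=5000, t_start=5.0, t_end=0.05, seed=0):
    """Single-spin-flip Metropolis moves under a geometric cooling schedule."""
    rng = random.Random(seed)
    n = len(fields)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    energy = ising_energy(spins, couplings, fields)
    best_spins, best_energy = spins[:], energy
    for step in range(n_steps):
        # Temperature falls geometrically from t_start to t_end.
        temp = t_start * (t_end / t_start) ** (step / (n_steps - 1))
        i = rng.randrange(n)
        spins[i] = -spins[i]  # propose flipping one spin
        delta = ising_energy(spins, couplings, fields) - energy
        # Metropolis rule: always accept downhill moves, sometimes accept uphill ones.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            energy += delta
            if energy < best_energy:
                best_spins, best_energy = spins[:], energy
        else:
            spins[i] = -spins[i]  # reject: undo the flip
    return best_spins, best_energy


if __name__ == "__main__":
    # Six spins in a ferromagnetic chain (J = -2 rewards aligned neighbours)
    # plus a uniform field h = +1 that favours s_i = -1.
    couplings = {(i, i + 1): -2 for i in range(5)}
    fields = [1] * 6
    print("simulated annealing:", simulated_annealing(couplings, fields))
    print("exhaustive search:  ", brute_force_minimum(couplings, fields))
```

Exhaustive search doubles in cost with every added variable, which is why heuristic searchers, classical or quantum, are used once problems get large.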

So a quantum annealer is more of a quantum optimizer or quantum solver than what we usually think of as a computer. Still, it fits the definition of quantum computation. What Albash and co. want to know is whether that quantum computation actually counts for anything.

Their conclusion is that quantum annealers run into trouble as problem sizes increase. At a fixed temperature, the distance a particular particle can “jump” or fluctuate shrinks as more particles are added to the system. This means the computer can explore a smaller range of solutions in the same amount of time, so the annealer becomes less efficient. According to the study, this analysis aligns well with experimental results from the actual D-Wave computer. It also agrees with previous research that has failed to identify a “quantum speedup” in D-Wave’s machines.
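A much simpler toy calculation shows why holding the temperature fixed becomes a bigger handicap as a system grows. This is not the model Albash and colleagues analyzed; it assumes a hypothetical system of N independent two-level units, each with an energy gap `gap` between its lower and upper level, in thermal equilibrium at temperature `temp` (Boltzmann’s constant set to 1, so both share units). Each unit sits in its lower level with probability 1/(1 + e^(-gap/temp)), so the chance that all N do so at once is that number raised to the Nth power, which shrinks exponentially with N.

```python
import math

# Hypothetical toy model (not the paper's model): n_units independent two-level
# units with energy gap `gap`, in thermal equilibrium at temperature `temp`.
# Boltzmann's constant is set to 1, so gap and temp share the same units.

def ground_state_probability(n_units, gap, temp):
    """Probability that every unit is in its lower level at the same time."""
    p_single = 1.0 / (1.0 + math.exp(-gap / temp))  # Boltzmann weight of the lower level
    return p_single ** n_units


def max_temperature_for(n_units, gap, target):
    """Highest temperature at which ground_state_probability still reaches `target`.

    Solving p_single ** n_units == target gives
    exp(-gap / T) = target ** (-1 / n_units) - 1.
    Requires 0.5 ** n_units < target < 1.
    """
    excess = math.expm1(-math.log(target) / n_units)  # target**(-1/n) - 1, computed accurately
    return -gap / math.log(excess)


if __name__ == "__main__":
    for n in (10, 100, 1000, 10000):
        p = ground_state_probability(n, gap=1.0, temp=0.2)
        t_max = max_temperature_for(n, gap=1.0, target=0.9)
        print(f"N={n:>5}: P(all in ground state) at T=0.2 is {p:.3g}; "
              f"T must be <= {t_max:.3f} to keep it at 0.9")
```

In this toy model the allowable temperature falls only slowly (roughly as 1/log N for large N), but it does have to fall: keep the temperature fixed while the system grows, and the chance of landing in the lowest-energy state collapses.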

This doesn’t necessarily spell doom for quantum annealing or D-Wave. For one thing, the argument is ongoing: previous negative results haven’t kept businesses like Lockheed Martin and Volkswagen from buying D-Wave quantum annealers. Quantum speedup or not, these are fast computers, and there is value in applying quantum algorithms to practical problems. Albash and co. note that their findings don’t rule out an annealer achieving a speedup, only that doing so will require increasingly difficult engineering as the machines scale up. At the very least, D-Wave skepticism remains valid.
