This Machine Kills CAPTCHAs

Credit to Author: Daniel Oberhaus| Date: Thu, 26 Oct 2017 18:58:05 +0000

As every domain of life becomes increasingly automated, humans are desperately seeking solace in the activities that differentiate us from our artificial doppelgangers. Although robots may be able to teach themselves how to master games like Go, at least they will never be able to write a book, make love, become a citizen of Saudi Arabia, or appreciate the natural beauty of a landscape, right?

Wrong.

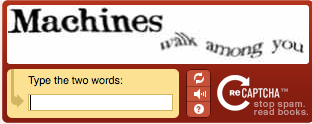

It is with a heavy heart that I must tell you that an artificial intelligence has finally cracked a widely used tool that was literally made to differentiate humans from robots: the CAPTCHA.

CAPTCHAs are the annoying puzzles that might ask you to retype a piece of distorted text or click on all the automobiles in a photograph to log on to sites like PayPal. According to research published today in Science, a new type of AI was able to solve certain types of CAPTCHA with up to 66.6 percent accuracy. To put this in perspective, humans solve the same type of CAPTCHA with about 87 percent accuracy, because some examples can be read in more than one way, and a CAPTCHA is considered broken if a bot can pass it more than 1 percent of the time.

Completely Automated Public Turing tests to tell Computers and Humans Apart, aka CAPTCHAs, were invented in the late 1990s as a way to prevent fraud and spam by bots. The basic idea was to create a puzzle that would be easy for a human to decipher, but difficult for a computer.

Even though computers are really good at doing math, don’t have much difficulty reading plain text, and are getting increasingly good at picking out objects in video and still images, slightly distorting those images throws them for a loop. The reason for this is that although a computer can be trained to recognize the letter ‘M’ in every font imaginable, it can’t generalize from this to recognize an ‘M’ in the nearly infinite ways that the letter might be distorted from the original font.

The ability to learn and generalize from a small set of examples is one of the things that sets our big monkey brains apart from computers and allows us to solve CAPTCHAs with ease. Or so it was thought. As detailed today in Science, researchers at the Zuckerberg- and Bezos-funded AI company Vicarious have developed a probabilistic machine-vision algorithm that was able to understand and pass a CAPTCHA test because it could generalize from a small set of examples.

Other researchers have trained deep-learning algorithms to break a CAPTCHA, but these often required millions of labeled examples to train the algorithm and only worked on one particular style. The Vicarious AI, on the other hand, can crack a variety of textual CAPTCHA styles and do so with far more efficiency.

Drawing upon insights from “experimental neuroscience data,” the Vicarious researchers made a probabilistic algorithm called a Recursive Cortical Network that takes a CAPTCHA and models it as a collection of shapes and appearances (such as the smoothness of the letters’ surfaces) based on a handful of training images of clean text.

Other neural networks are able to recognize words and letters in a CAPTCHA only after those words and letters have been parsed and labeled for them by a human over millions of training examples. The Recursive Cortical Network, on the other hand, works closer to the way an actual human brain responds to visual cues. The RCN first generates models based on letter contours and appearances from a handful of undistorted example letters (in this case, derived from the Georgia font) and then uses those models to probabilistically determine which letter it is ‘looking’ at in a distorted CAPTCHA phrase.
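To make the idea of "probabilistically determining" a letter from clean examples concrete, here is a deliberately tiny sketch. It is not the Recursive Cortical Network and does not reproduce anything from the Vicarious paper: the 5x5 glyphs, the `FLIP_PROB` noise level, and every function name are assumptions invented for illustration. It only shows the general pattern of scoring how well each clean character model explains a noisy image and picking the most probable one. It handles random pixel noise, not the warping, overlap, and clutter of a real CAPTCHA.

```python
import math

# Hypothetical 5x5 clean templates, one per character (1 = ink, 0 = blank).
TEMPLATES = {
    "L": ["10000", "10000", "10000", "10000", "11111"],
    "T": ["11111", "00100", "00100", "00100", "00100"],
    "O": ["11111", "10001", "10001", "10001", "11111"],
}

FLIP_PROB = 0.1  # assumed chance that noise flips any single pixel


def log_likelihood(image, template, flip_prob=FLIP_PROB):
    """Log-probability of seeing `image` if `template` was drawn and
    each pixel was then flipped independently with `flip_prob`."""
    total = 0.0
    for image_row, template_row in zip(image, template):
        for seen, expected in zip(image_row, template_row):
            total += math.log(1 - flip_prob if seen == expected else flip_prob)
    return total


def posterior(image, templates=TEMPLATES):
    """Posterior probability of each character, assuming a uniform prior."""
    scores = {ch: log_likelihood(image, tpl) for ch, tpl in templates.items()}
    peak = max(scores.values())  # subtract the peak for numerical stability
    weights = {ch: math.exp(score - peak) for ch, score in scores.items()}
    norm = sum(weights.values())
    return {ch: weight / norm for ch, weight in weights.items()}


def classify(image):
    """Return the character whose clean model best explains the image."""
    probabilities = posterior(image)
    return max(probabilities, key=probabilities.get)


# A 'T' with two corrupted pixels.
noisy_t = ["11011", "00100", "00110", "00100", "00100"]
print(classify(noisy_t), posterior(noisy_t))
```

The point of the toy is that nothing in it was trained on distorted examples: the only inputs are one clean template per character and an assumed noise model.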


As the researchers wrote in the paper, a CAPTCHA is considered broken if a machine can solve it at a rate above 1 percent. The Vicarious algorithm was presented with a variety of different CAPTCHA styles and had remarkably high success rates in cracking them. It solved reCAPTCHAs with 66.6 percent accuracy, BotDetect with 64.4 percent accuracy, Yahoo with 57.4 percent accuracy, and PayPal with 57.1 percent accuracy, all with “very little training data.” For reCAPTCHA, for example, the algorithm was trained on just five non-distorted examples per character.
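For a sense of scale, the reported figures can be compared against the 1 percent threshold with a few lines of arithmetic. The accuracies below are the ones quoted above; the function and variable names are hypothetical and chosen only for this sketch.

```python
BROKEN_THRESHOLD = 0.01  # a CAPTCHA counts as broken above a 1 percent solve rate

# Solve rates reported for the Vicarious algorithm, as quoted in the article.
REPORTED_ACCURACY = {
    "reCAPTCHA": 0.666,
    "BotDetect": 0.644,
    "Yahoo": 0.574,
    "PayPal": 0.571,
}


def is_broken(solve_rate, threshold=BROKEN_THRESHOLD):
    """True if the solve rate exceeds the 'broken' threshold."""
    return solve_rate > threshold


def margin_over_threshold(solve_rate, threshold=BROKEN_THRESHOLD):
    """How many times larger the solve rate is than the threshold."""
    return solve_rate / threshold


for style, rate in REPORTED_ACCURACY.items():
    print(f"{style}: broken={is_broken(rate)}, "
          f"about {margin_over_threshold(rate):.0f}x the threshold")
```

Every style clears the bar by well over a factor of 50, which is why even the lowest score, PayPal's 57.1 percent, still counts as a decisive break.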

Other state-of-the-art neural networks would require training sets that are 50,000 times larger and based on actual CAPTCHA strings, rather than just clean characters. As a benchmark, the researchers used a different neural net model to achieve an 89.9 percent accuracy rate, but it required 2.3 million training images to get there, and its accuracy dropped severely when small changes were made to the CAPTCHA, such as slightly increasing the spacing between the letters.

The main improvement with the new algorithm is that rather than feeding a neural network millions of examples of one specific type of CAPTCHA, the Vicarious researchers created a neural net that builds character models based on shape and appearance, which it can then use to ‘understand’ a CAPTCHA.

The researchers’ success at creating a neural net that can solve a wide variety of CAPTCHAs means humans are going to have to start looking for more robust bot-detection mechanisms. One solution pioneered by Google is audio reCAPTCHAs, which are just like text CAPTCHAs but with sound. Recently, however, a team of computer scientists at the University of Maryland created unCaptcha, a freely available algorithm that can solve audio reCAPTCHAs in a few seconds with over 85 percent accuracy.

Although CAPTCHAs will still likely be used as a first line of defense on the internet for the foreseeable future, they can hardly be considered a guarantee that a website visitor is actually human anymore. Before long, we may all be subjected to a Voight-Kampff test from Blade Runner just to log on.