The Bots That Are Changing Politics

Credit to Author: Brian Anderson| Date: Thu, 02 Nov 2017 14:30:00 +0000

Editor’s note: This essay is drawn from discussions and writings around a June 2017 convening organized and led by Samuel Woolley, Research Director of the new DigIntel Lab at the Institute for the Future, alongside fellow bot experts* Renee DiResta, John Little, Jonathon Morgan, Lisa Maria Neudert, and Ben Nimmo. The symposium was held at Jigsaw, the Google / Alphabet think-tank and technology incubator. Disclosure: Jigsaw provided space, funded Woolley as a (former) research fellow, and covered travel costs.

Bots and their cousins—botnets, bot armies, sockpuppets, fake accounts, sybils, automated trolls, influence networks—are a dominant new force in public discourse.

You may have heard that bots can be used to threaten activists, swing elections, and even engage in conversation with the President. Bots are the hip new media; Silicon Valley has marketed the chatbot as the next technological step after the app. Donald Trump himself has said he wouldn’t have won last November without Twitter, where, researchers found, bots massively amplified his support on the platform.

Scholars have argued that nearly 50 million accounts on Twitter are in fact run automatically by bot software. On Facebook, social bots (accounts run by automated software that mimic real users or work to communicate particular information streams) can be used to automate group pages and spread political advertisements. Recent disclosures from Facebook show that a Russian “troll farm” with close ties to the Kremlin spent around $100,000 on ads ahead of the 2016 US election and produced thousands of organic posts that spread across Facebook and Instagram. The same firm, the Internet Research Agency, has been known to make widespread use of bots in its attempts to manipulate public opinion over social media.

Despite the fervor over the political usage of bots during several recent global elections, such as last year’s US elections, the term “bot,” like “fake news,” remains ambiguous. It’s now sometimes used to refer to any social media persona producing content with which others do not agree.

What’s a Bot and What’s It Do?

One way to define bots: they are software that imitates human behavior. This can take many forms, such as chat bots that facilitate customer support or act as personal assistants. A political bot might be programmed to leave supportive comments on a politician’s Facebook page, target journalists with a flood of angry tweets, or engage with a post to artificially inflate its popularity.

The difference between humans and bots is that while an active human social media user might find time to post once or twice a day to hundreds of followers, bots don’t need to work, look after the kids, or sleep. Bots can be programmed to have a single mission: post as much as possible without getting caught. This makes bots a powerful tool for those who wish to shape public opinion by dominating, or guiding, conversation.

Each can be programmed with its own unique identity; some even steal and build upon the identities of real users. Some bots are designed to fight for social good and clearly identify themselves as bots rather than pretending to be human. Covert political bots, meanwhile, are designed to do the opposite: to trick and deceive, to convincingly appear to be real people with actual political ideas. Carefully manufactured and managed identities, often shaped to mirror that of their target audience, are a hallmark of such clandestine bot campaigns.

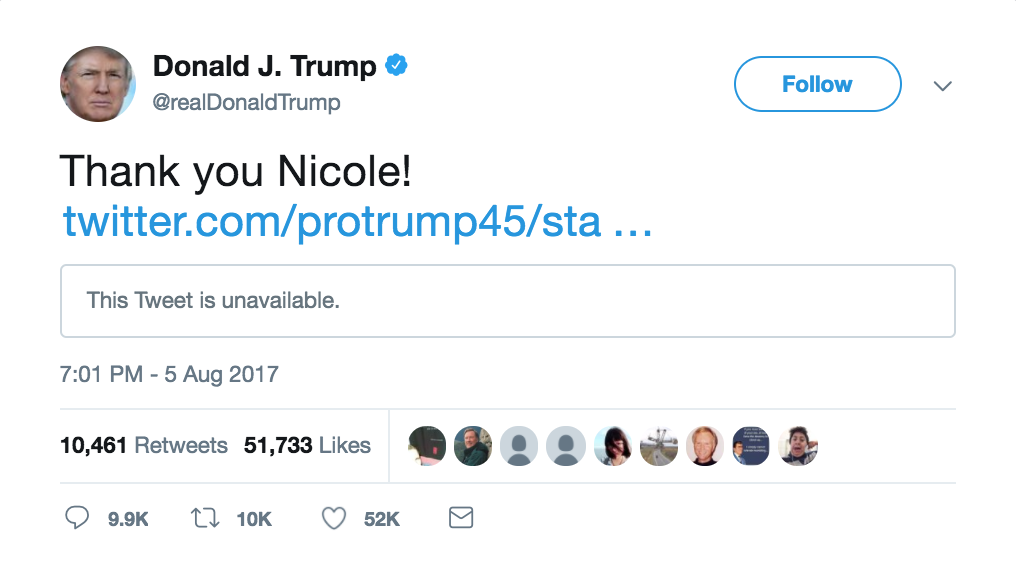

Consider the now infamous case of Trump’s August 2017 interaction with a Twitter user by the name of Nicole Mincey. Trump, in front of his (at the time) 35 million followers, thanked Mincey for tweeting that he was “working hard for the American people.” (At the time of Nicole’s tweet, Trump was on the golf course.)

Mincey, who had nearly 150,000 Twitter followers, seemed to be an African American conservative and Trump supporter. On closer inspection, however, it became clear that something wasn’t quite right; most jumped to the conclusion that this was a political bot account. It turned out that Mincey’s photo was taken from a stock-photo company. Further investigation revealed that the account was “a fictionalized character being used by the marketing arm of a fly-by-night e-commerce operation” selling Trump-related merchandise. The account has since been suspended.

Was this a political bot? Journalists found that the robust following behind the Mincey account was itself made up of a huge social botnet—basically a group of bots built to follow one another so as to evade the Twitter algorithm that detects problematic bot activity while also giving the illusion of legitimacy. The account also tweeted at a rate beyond the capacity of a normal human user.
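The two signals described above, an implausibly high posting rate and a follower base made up of accounts that mostly follow one another, can be sketched in a few lines of Python. This is only an illustration: the function names, the input shapes, and both thresholds are assumptions made for the example, not anything Twitter or the journalists involved are known to use, and a signal like this is suggestive rather than conclusive.

```python
from datetime import datetime, timedelta

SECONDS_PER_DAY = 86400


def posts_per_day(timestamps):
    """Average posts per day over the period the timestamps cover.

    Periods shorter than one day are treated as one full day so that a
    short burst is not extrapolated into an inflated daily rate.
    """
    if not timestamps:
        return 0.0
    span = max(timestamps) - min(timestamps)
    days = max(span.total_seconds() / SECONDS_PER_DAY, 1.0)
    return len(timestamps) / days


def mutual_follow_ratio(followers, follows):
    """Fraction of possible follower-to-follower links that actually exist.

    followers: set of account ids that follow the target account
    follows:   dict mapping an account id to the set of ids it follows
    """
    members = set(followers)
    n = len(members)
    if n < 2:
        return 0.0
    links = sum(len(follows.get(a, set()) & (members - {a})) for a in members)
    return links / (n * (n - 1))


def looks_automated(timestamps, followers, follows,
                    max_daily_posts=144, max_density=0.5):
    """Flag an account when either signal exceeds its (hypothetical) threshold."""
    too_fast = posts_per_day(timestamps) > max_daily_posts
    too_dense = mutual_follow_ratio(followers, follows) > max_density
    return too_fast or too_dense


if __name__ == "__main__":
    start = datetime(2017, 8, 5)
    # Hypothetical account posting every four minutes for most of a day.
    burst = [start + timedelta(minutes=4 * i) for i in range(300)]
    print(posts_per_day(burst))
```

Both thresholds would need tuning against real data, and either signal can flag a real person who simply posts a great deal or belongs to a tight-knit community.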

Nicole Mincey, however, turned out to be a real person who had indeed done work with the e-commerce operation in question.

The line between bot and human is sometimes blurred. Some accounts are entirely automated, spamming Twitter, Facebook, and other social media with the same messages at high volume. Others are more subtle, posting unique, carefully-crafted messages, and even interacting with other users on their social media platform of choice. These more nuanced accounts are known as personas, cyborgs, or sockpuppets. They aren’t necessarily fully automated, but are nonetheless “fake” people operated by an individual or group who want to mask their true identity.

Some governments operate “sockpuppet” accounts at considerable scale. Take, for example, the St. Petersburg-based Internet Research Agency, which employed dozens of people at a reported cost of $2.3 million to spread its memes on Facebook, YouTube, and elsewhere. Or China’s “50 Cent Army,” which uses a massive number of persona accounts to flood the internet with comments meant to distract people from crises or to praise the government. Russia makes heavy use of bots in attacking opponents of the Kremlin, while China is known to use human labor to run shell accounts. Both types of accounts, however, commonly get referred to as bots.

These information operations often rely on different types of bots to execute a complex strategy. Some bots are used to unite and enhance the credibility of real users who knowingly or even unwittingly publish content that supports the operation’s objectives. Other bots are used to disrupt the other side, such as posting incessantly negative comments on opposition activists’ profiles. Many bots simply introduce chaos and misinformation to poison discourse. Piercing this wall of “noise” is increasingly beyond the reach of all but the most sophisticated social media users.

The most effective networks of bots manipulating public discussion on social media operate a combination of automated and cyborg accounts. Bots in a network, or botnet, deploy different but complementary tactics toward achieving their goal:

- Sockpuppets (part-human/part-bot or, simply, cyborgs) initiate the conversation, seeding new ideas and driving discussion in online communities.

- The ideas are then amplified by as many as tens of thousands of automated accounts that we call “amplifier bots,” repurposing, retweeting, and republishing the same language.

- “Approval bots” engage with specific tweets or comments, “Liking,” “retweeting,” or “replying” to enhance credibility, and give legitimacy to an idea.

- In hotly contested topic areas, bots are often used to harass and attack individuals and organizations in an attempt to push them out of the conversation.
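For readers who study these networks, the division of labor in the list above can be made concrete with a rough sketch that guesses an account's role from the mix of actions it performs. The action labels, role names, and cutoffs here are all assumptions chosen for illustration. Harassment accounts are left out because telling them apart would require looking at the content and targets of their posts, not just counts.

```python
from collections import Counter


def classify_role(actions):
    """Guess an account's role from its action mix.

    actions: list of strings, each one of
             'original', 'retweet', 'like', or 'reply'.
    The cutoffs below are hypothetical, not empirically derived.
    """
    counts = Counter(actions)
    total = sum(counts.values())
    if total == 0:
        return "inactive"

    retweet_share = counts["retweet"] / total
    approval_share = (counts["like"] + counts["reply"]) / total
    original_share = counts["original"] / total

    if retweet_share >= 0.8:
        return "amplifier"   # mostly republishes others' language
    if approval_share >= 0.8:
        return "approval"    # mostly likes and replies
    if original_share >= 0.5:
        return "seeder"      # mostly writes new posts, like a sockpuppet
    return "mixed"
```

An account whose activity is nine retweets and one original post would come back as "amplifier" under these cutoffs, while one that mostly writes its own posts would come back as "seeder."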

Fully automated or not, relatable human identities, coupled with superhuman posting abilities, are the key to the success of political bots. At a glance, they look like normal social media users, and most users are fooled because most people don’t have time to scrutinize bot identities. Nor do most users, or even researchers, understand the ways that huge numbers of bots might affect trends or news content, including those associated with US politics, on Twitter, Facebook, or Google.

And it’s still not clear how far manipulated discussion of candidates or divisive policy issues can alter public opinion. When thousands, or tens of thousands, of sockpuppet and automated accounts are operated by a single user or group, they can create the impression that many thousands of people believe the same thing. In the same way that mass media like television and radio were once used to manufacture consent, bots can be used to manufacture social consensus.

In the most successful bot-aided campaigns, real social media users are influenced to the point they willingly participate in sharing false or inflammatory content with their networks. This often leads to mainstream media coverage, which further elevates the campaign even when the coverage is intended to debunk false or misleading information.

Data for Democracy researchers Kris Shaffer, Ben Starling, and C.E. Carey noted this phenomenon after French president Emmanuel Macron’s campaign emails were hacked just days before the French elections. A group of users organized in the “/pol” channel in the anonymous internet community 4chan. Shaffer, Starling, and Carey tracked how these catalyst users designed a campaign intended to disseminate the hacked Macron campaign data to a more mainstream audience on Twitter.

Once the content had been posted to Twitter, it was quickly amplified by high-follower accounts like @DisobedientMedia and @JackPosobiec, who, with over 230,000 followers, function as what the researchers call “signal boosters.” Ben Nimmo, information defense fellow at the Atlantic Council (and a co-author on this article), has outlined the same roles broadly as shepherds and obedient sheepdogs.

However they are labeled, these roles are consistent from campaign to campaign: someone crafts a message and strategy, and automated accounts and fake personae are used to make it trend. Once trending, a meme easily attracts attention from more social media users until it spreads organically into the mainstream and the media. Recent research shows that bots can also be built to target particular human users who might be more likely to engage with and share a given piece of propaganda. The authors argue that automated agents can be used to target users with particular private views or network positions in order to spread, or curtail, fake news.

The Future of Bots

Even as companies like Twitter and Facebook acknowledge the bot problem, and race to remove bots from their platforms, the bots are becoming increasingly sophisticated and harder to detect.

A wave of Silicon Valley startups, along with established tech giants like Amazon and Google, is clamoring to produce “conversational agents” (aka chat bots) that can have a believable conversation with a human. In most cases these companies have nothing to do with propaganda and simply want to make it easier for you to chat with applications that help you book a flight or order groceries.

But the same technology that allows machines to understand and communicate in human language can and will be used to make social media bots even more believable. Instead of short, simple messages blasted out to whoever will listen, these bots will carry on coherent conversations with other users, with infinite patience to debate and persuade. They can also be programmed to collect, store, and use information gleaned from these conversations in later communication.

Text communication is just the beginning. University of Washington researchers recently created an eerily believable fake video of Barack Obama giving a speech, proving that it is possible to generate audio and video that passes as human. Similarly, it is increasingly possible to create audio and video of entirely fake events. The technology that allows bots to engage in credible dialogue will eventually be married with the ability to produce audio and video. Humans already have trouble recognizing relatively naive text bots masquerading as real users; these advances in machine learning will give propagandists even more power to manipulate the public. Reality, at least in the digital space, will increasingly be up for grabs. This uncertainty is, in and of itself, a victory for the propagandist. Civil society, however, can and must work to generate sensible responses to this problem.

Until now, computational propaganda has been limited largely to politics. Operating the thousands of sockpuppets and tens or hundreds of thousands of bots necessary to run a large-scale information operation is costly. However, as with every new technology, the cost of operating botnets is decreasing. The software used to wield this type of automation is more accessible than ever before.

Increasingly, bots are being used to target issue-based groups, brands, and corporations. Hollywood films like last year’s Ghostbusters reboot and celebrities like Shia LaBeouf have been targeted by troll armies organized on Reddit and 4chan. It probably won’t be long before attackers running a group of sockpuppet accounts in the US seed fake news about a corporation (say, a massive data breach), amplify that messaging with 10,000 bots until the story is trending on Twitter, and wait until the story is picked up by mainstream media before making a large stock purchase, or simply claiming victory.

Similar attacks have actually occurred in Poland, where bots have been used to spread propaganda on behalf of pharmaceutical and natural resource companies.

How to Respond to the (Ro)bots

There is a growing and urgent need for strategies to stop bot-enabled influence operations from shaping our politics and culture.

If political bots can be deployed to poison public discourse, they can also be deployed to defend it. Organizations being targeted by a malicious attack could launch “counter bots” to distract the attackers, and disseminate supportive messages in Twitter replies and Facebook comments. Such “cognitive denial of service” tactics by good bots might reclaim the space that digital attackers use to manufacture consensus. Bots could also be used as a social prosthesis to raise awareness about social issues or to connect activist groups that might otherwise not connect.

This approach is not without risk. Using bots to tag fake news, for instance, could backfire or reinforce people’s beliefs. Many have rightly suggested that introducing good bots to compete with bad bots reduces public discourse to an arms race. Rather than re-establishing a space for democratic deliberation on social media, counter-bot strategies risk further polluting the discussion. But the unfortunate reality is that the arms race has already started, and only one side is really armed.

Regardless, this “bot vs bot” approach is a short-term solution. Ultimately, social media platforms provide the scaffolding for bots to do their work. This puts the social networking companies in the best position to address the problem at its root. However, those very companies have displayed minimal initiative to date in targeting social bots because of financial incentives—bot activity improves the metrics marketers use to justify spending money on social media advertising.

Though Facebook is sharing what it knows about Russian-bought advertisements with the US Congress, the social giant has shared very little about how bots or cyborgs might be used to run group pages on the site, the areas where people on the platform actively engage with users outside of their immediate social networks.

Twitter’s public relations and policy teams have consistently attacked external research into the “impact of bots on Twitter” as “often inaccurate and methodologically flawed.” Yet the company does not effectively deploy its own researchers to collaborate with third-party scientists in efforts to address its colossal bot problem.

Google, on the other hand, has mostly managed to stay out of public conversations about how the use of bots on its various platforms might affect politics or public knowledge. This despite the fact that Columbia professor Jonathan Albright has found that YouTube, a Google company, “is being harnessed as a powerful agenda booster for certain political voices.”

In the platforms’ defense, they face a confounding problem when handling the cyborg and sockpuppet accounts: real people operate them, and this anonymous activity doesn’t always violate the platforms’ respective Terms of Service. However, there are some common sense steps social media companies can take to address the problem:

- Label bots as automated accounts. This is technically achievable and would increase transparency in online political conversation.

- Share data for network analysis with researchers. This would shed light not only on who operates bots and how they are connected, but also on the dynamics of how bot messages spread throughout a social network.

- Enforce algorithmic “shadow bans,” in which accounts are not removed from the platform but their activity is hidden from other users. Such bans could silently minimize the reach of suspected accounts, though they will not address the core issue of authenticity.
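The shadow-ban idea in the last item can be illustrated with a minimal sketch: a flagged account's posts stay on the platform and remain visible to the account itself, but are filtered out of everyone else's feed. The `Post` type, the `visible_feed` function, and the account names are hypothetical, invented for this example, and say nothing about how any platform actually implements such bans.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    author: str
    text: str


def visible_feed(posts, viewer, shadow_banned):
    """Return the posts a given viewer sees.

    Posts by shadow-banned authors are hidden from everyone except the
    author, so the banned account gets no obvious sign of the ban.
    """
    return [
        post for post in posts
        if post.author not in shadow_banned or post.author == viewer
    ]


if __name__ == "__main__":
    posts = [Post("alice", "Town hall tonight"), Post("bot42", "Buy now!!!")]
    # Hypothetical flagged account: alice no longer sees bot42's post.
    print(visible_feed(posts, viewer="alice", shadow_banned={"bot42"}))
```

As the list notes, this limits reach without settling whether the account is authentic: the filter only hides content, and a mistaken flag would silence a real person without their knowledge.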

Despite the challenges of identifying specific methods to resolve the problem, one thing is clear: If platforms do not address this issue, we will continue to see decreasing trust in online conversations and the degradation of public discourse, as online spaces become increasingly unusable.

*About the authors:

Renee DiResta (@noupsidedown) Founding member of Haven, 2017 Presidential Leadership Scholar.

John Little (@blogsofwar) Founder, Blogs of War, Fellow, Institute for the Future

Jonathon Morgan (@jonathanmorgan) Founder and CEO, NewKnowledge.io

Lisa Maria Neudert (@lmneudert) Researcher, Computational Propaganda Project, University of Oxford

Ben Nimmo (@benimmo) Information defense fellow, the Digital Forensic Research Lab, Future Europe Initiative, Atlantic Council

Samuel Woolley (@samuelwoolley) Research director, DigIntel Lab, Institute for the Future, researcher, Computational Propaganda Project, University of Oxford
