Neural networks reveal the images used to train them | Kaspersky official blog

Credit to Author: Enoch Root | Date: Mon, 24 Apr 2023 14:43:02 +0000

Your (neural) networks are leaking

Researchers at universities in the U.S. and Switzerland, in collaboration with Google and DeepMind, have published a paper showing how data can leak from image-generation systems that use the machine-learning algorithms DALL-E, Imagen or Stable Diffusion. All of them work the same way on the user side: you type in a specific text query — for example, “an armchair in the shape of an avocado” — and get a generated image in return.

Image generated by the DALL-E neural network. Source.

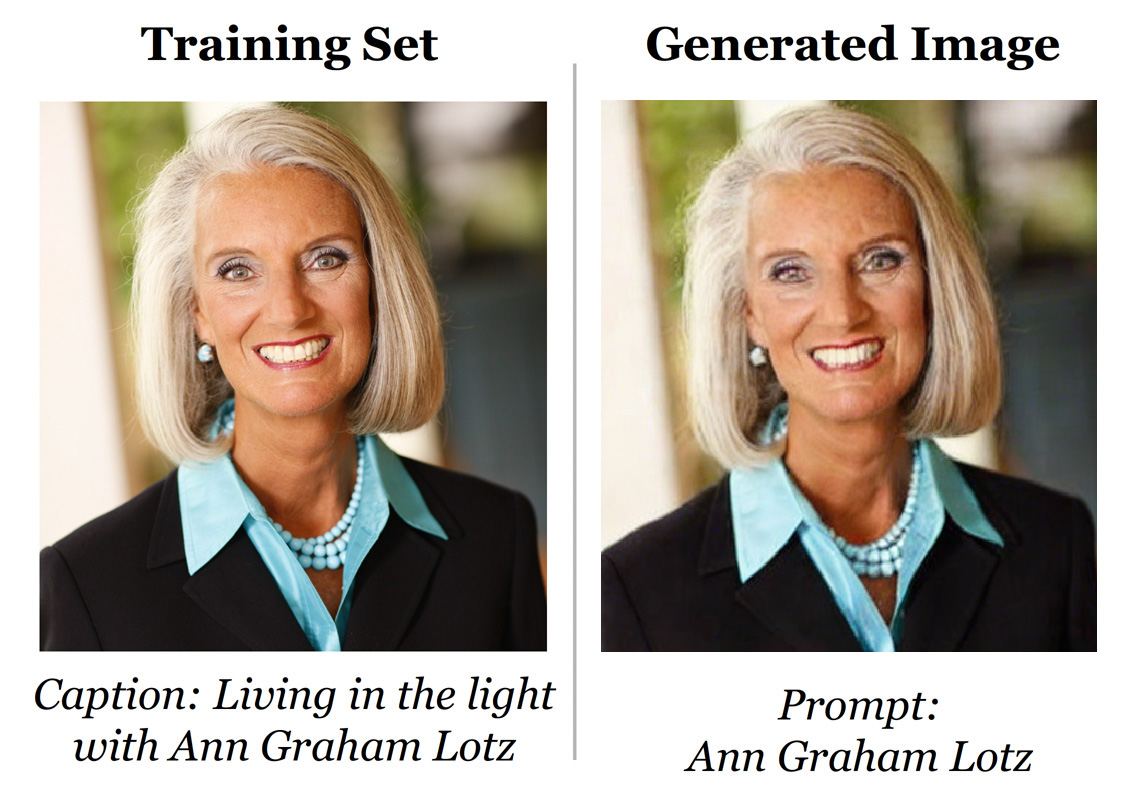

All these systems are trained on a vast number of images (hundreds of millions, in the case of the largest models), each paired with a text description. The idea behind such neural networks is that, by consuming a huge amount of training data, they can create new, unique images. However, the main takeaway of the new study is that these images are not always so unique. In some cases it’s possible to force the neural network to reproduce, almost exactly, an original image previously used for training. And that means that neural networks can unwittingly reveal private information.

Image generated by the Stable Diffusion neural network (right) and the original image from the training set (left). Source.

More data for the “data god”

The output of a machine-learning system in response to a query can seem like magic to a non-specialist: “Whoa, it’s like an all-knowing robot!” But there’s no magic really…

All neural networks work in more or less the same way: an algorithm is trained on a data set (for example, a series of pictures of cats and dogs) in which each image comes with a description of exactly what it depicts. After the training stage, the algorithm is shown a new image and asked to work out whether it’s a cat or a dog. From these humble beginnings, the developers of such systems moved on to a more complex scenario: an algorithm trained on lots of pictures of cats creates, on demand, an image of a pet that never existed. Such experiments are carried out not only with images, but also with text, video and even voice: we’ve already written about the problem of deepfakes, digitally altered videos in which people (mostly politicians or celebrities) appear to say things they never actually said.

For all neural networks, the starting point is a set of training data: neural networks cannot invent new entities from nothing. To create an image of a cat, the algorithm must study thousands of real photographs or drawings of these animals. Some data sets are in the public domain, but there are plenty of arguments for keeping others confidential. Some are the intellectual property of the developer company, which invested considerable time and effort into creating them in the hope of achieving a competitive advantage. Still others, by definition, constitute sensitive information. For example, experiments are underway to use neural networks to diagnose diseases based on X-rays and other medical scans. This means that the training data contains the actual health data of real people, which, for obvious reasons, must not fall into the wrong hands.

Diffuse it

Although machine-learning algorithms may look the same to an outsider, they are in fact different. In their paper, the researchers pay special attention to diffusion models. They work like this: the training data (again, images of people, cars, houses, etc.) is distorted by adding noise, and the neural network is then trained to restore such images to their original state. This method makes it possible to generate images of decent quality, but a potential drawback (in comparison with generative adversarial networks, for example) is a greater tendency to leak data.
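To make the "add noise, then learn to remove it" idea concrete, here is a minimal sketch of the noising step only. It assumes the commonly used formulation in which a clean image is blended with Gaussian noise according to a signal fraction (called `alpha_bar` here); the article does not say which exact formulation the studied models use, and the function name and the four-pixel toy "image" are purely illustrative.

```python
import math
import random

def add_noise(pixels, alpha_bar, rng):
    """Blend a clean image with Gaussian noise.

    alpha_bar in (0, 1] is the fraction of signal kept: 1.0 returns the
    image unchanged, values near 0 return almost pure noise.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [
        math.sqrt(alpha_bar) * p + math.sqrt(1.0 - alpha_bar) * n
        for p, n in zip(pixels, noise)
    ]
    return noisy, noise

rng = random.Random(0)
image = [0.0, 0.5, 1.0, -1.0]  # toy "image": four pixel values
noisy, noise = add_noise(image, alpha_bar=0.5, rng=rng)

# During training, a network would be shown `noisy` and scored on how well
# it recovers the clean image (or, equivalently, predicts `noise`).
# No network is trained here; this only builds the training pair.
```

The point of the sketch is that the training target is always a real training image, which is one intuition for why a model can end up reproducing such an image rather than something new.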

The original data can be extracted from them in at least three different ways. First, using specific queries, you can force the neural network to output a specific source image rather than something unique generated from thousands of pictures. Second, the original image can be reconstructed even if only a part of it is available. Third, it’s possible to simply establish whether or not a particular image is contained within the training data.
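How would you tell that an output is a leaked source image rather than something new? One simple approach, sketched below, is to compare each generated image with known training images and flag pairs that are almost identical. The distance measure (root-mean-square pixel difference), the threshold of 0.05 and the function names are assumptions made for illustration, not the researchers' actual procedure.

```python
import math

def rms_distance(a, b):
    """Root-mean-square difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

def find_near_copies(generated, training_set, threshold=0.05):
    """Return (generated_index, training_index) pairs that nearly match."""
    matches = []
    for gi, g in enumerate(generated):
        for ti, t in enumerate(training_set):
            if rms_distance(g, t) < threshold:
                matches.append((gi, ti))
    return matches

# Toy data: images are flat lists of pixel intensities in the range 0..1.
training_set = [[0.1, 0.9, 0.4, 0.7], [0.8, 0.2, 0.3, 0.6]]
generated = [
    [0.5, 0.5, 0.5, 0.5],      # a novel image
    [0.11, 0.89, 0.41, 0.70],  # almost identical to training image 0
]
near_copies = find_near_copies(generated, training_set)
print(near_copies)  # [(1, 0)]
```

A real check would need a distance that tolerates cropping, resizing and compression, and a threshold tuned on data; this version only catches near pixel-for-pixel copies.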

Very often, neural networks are… lazy, and instead of a new image, they produce something from the training set if it contains multiple duplicates of the same picture. Besides the above example with the Ann Graham Lotz photo, the study gives quite a few other similar results:

If an image is duplicated in the training set more than a hundred times, there’s a very high chance of it leaking in near-original form. However, the researchers also demonstrated ways to retrieve training images that appeared only once in the original set. This method is far less efficient: out of five hundred tested images, the algorithm recreated only three.

The most artistic method of attacking a neural network involves recreating a source image using just a fragment of it as input.

The researchers deleted part of a picture and asked the neural network to complete it. This technique can be used to determine fairly accurately whether a particular image was in the training set. If it was, the machine-learning algorithm generated an almost exact copy of the original photo or drawing. Source.
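The logic of this fill-in-the-blank test can be sketched in a few lines. Everything here is a toy: `memorizing_model` and `average_fill` are hypothetical stand-ins for a real image model, and the error threshold of 0.05 is an arbitrary assumption. The idea is only that a model which has memorized an image fills the hidden region far more accurately than it could for an image it has never seen.

```python
def masked_error(original, completed, mask):
    """Mean absolute error over the pixels that were hidden (mask is True)."""
    hidden = [abs(o - c) for o, c, m in zip(original, completed, mask) if m]
    if not hidden:
        raise ValueError("mask must hide at least one pixel")
    return sum(hidden) / len(hidden)

def looks_memorized(image, mask, inpaint, threshold=0.05):
    """Hide the masked pixels, let the model fill them in, compare with the truth."""
    visible = [None if m else p for p, m in zip(image, mask)]
    completed = inpaint(visible)
    return masked_error(image, completed, mask) < threshold

# Hypothetical toy "model" that has memorized exactly one training image.
MEMORIZED = [0.2, 0.8, 0.6, 0.1]

def average_fill(visible):
    # Generic behavior: fill holes with the mean of the visible pixels.
    known = [v for v in visible if v is not None]
    mean = sum(known) / len(known)
    return [mean if v is None else v for v in visible]

def memorizing_model(visible):
    # If the visible part agrees with the stored image, return the stored image.
    if all(v is None or v == m for v, m in zip(visible, MEMORIZED)):
        return list(MEMORIZED)
    return average_fill(visible)

mask = [False, False, True, True]  # hide the second half of the image
seen = looks_memorized(MEMORIZED, mask, memorizing_model)
unseen = looks_memorized([0.3, 0.7, 0.9, 0.0], mask, memorizing_model)
print(seen, unseen)  # True False
```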

At this point, let’s divert our attention to the issue of neural networks and copyright.

Who stole from whom?

In January 2023, three artists sued the creators of image-generating services that used machine-learning algorithms. They claimed (justifiably) that the developers of the neural networks had trained them on images collected online without any respect for copyright. A neural network can indeed copy the style of a particular artist, and thus deprive them of income. The paper hints that in some cases algorithms can, for various reasons, engage in outright plagiarism, generating drawings, photographs and other images that are almost identical to the work of real people.

The study makes recommendations for strengthening the privacy of the original training set:

- Get rid of duplicates.

- Reprocess training images, for example by adding noise or changing the brightness; this makes data leakage less likely.

- Test the algorithm with special training images, then check that it doesn’t inadvertently reproduce them accurately.
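As a rough illustration of these three recommendations, the sketch below removes exact duplicates, applies a small random brightness shift with noise, and checks whether planted test images ("canaries") come back out verbatim. The function names, the byte-level hashing and the perturbation ranges are all assumptions chosen for brevity; the study's own tooling is not described in this article.

```python
import hashlib
import random

def deduplicate(images):
    """Keep the first copy of each byte-identical image (pixels are ints 0..255)."""
    seen, unique = set(), []
    for img in images:
        digest = hashlib.sha256(bytes(img)).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(img)
    return unique

def perturb(img, rng, max_shift=10, noise=3):
    """Apply a random brightness shift plus per-pixel noise, clamped to 0..255."""
    shift = rng.randint(-max_shift, max_shift)
    return [min(255, max(0, p + shift + rng.randint(-noise, noise))) for p in img]

def leaked_canaries(canaries, outputs):
    """Return the canary images that appear verbatim among model outputs."""
    produced = {tuple(o) for o in outputs}
    return [c for c in canaries if tuple(c) in produced]

images = [[10, 200, 30], [10, 200, 30], [5, 5, 5]]
unique = deduplicate(images)  # two images remain
rng = random.Random(42)
augmented = [perturb(img, rng) for img in unique]

canary = [1, 2, 3]
leaked = leaked_canaries([canary], [[9, 9, 9], [1, 2, 3]])
print(len(unique), leaked)
```

Exact hashing misses resized or recompressed copies, so a practical pipeline would need some form of near-duplicate detection instead; likewise, a canary check that only looks for verbatim copies will not catch near-copies.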

What next?

The ethics and legality of generative art certainly make for an interesting debate, one in which a balance must be sought between artists and the developers of the technology. On the one hand, copyright must be respected. On the other, is computer art so different from human art? In both cases, the creators draw inspiration from the works of colleagues and competitors.

But let’s get back down to earth and talk about security. The paper provides a specific set of facts about only one machine-learning model. Extending the concept to all similar algorithms, we arrive at an interesting situation. It’s not hard to imagine a scenario whereby a mobile operator’s smart assistant hands out sensitive corporate information in response to a user query: after all, it was in the training data. Or, for example, a cunning query tricks a public neural network into generating a copy of someone’s passport. The researchers stress that such problems remain theoretical for the time being.

But other problems are already with us. As we speak, the text-generating neural network ChatGPT is being used to write real malicious code that (sometimes) works. GitHub Copilot, meanwhile, helps programmers write code using a huge amount of open-source software as input, and the tool doesn’t always respect the copyright and privacy of the authors whose code ended up in the sprawling set of training data. As neural networks evolve, so too will the attacks on them, with consequences that no one yet fully understands.