Researcher: ‘We Should Be Worried’ This Computer Thought a Turtle Was a Gun

Credit to Author: Kate Lunau | Date: Thu, 02 Nov 2017 16:09:28 +0000

We’re putting more trust than ever in artificial intelligence to take care of our fleshy bodies in risky situations. Self-driving cars, airport luggage scans, security cameras with facial recognition, and various medical devices all employ AI systems, and more will in the future. But neural networks are easy to fool if you fiddle with their inputs just a tiny bit.

By manipulating a few pixels in an image, you can trick a neural network—even one that’s great at recognizing cats, and is trained on hundreds or thousands of images of felines—into thinking that it’s looking at something completely different.

An image altered this way is called an adversarial example, and potential attackers can use such examples to trick or confuse an AI. For the first time, researchers have tricked an AI into thinking a real-world object (in this case, a 3D-printed turtle) is a rifle.
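To see why a tiny nudge can be enough, here is a minimal sketch in Python. Everything in it is a hypothetical toy: the "classifier" is a plain weighted sum over 784 pixels rather than a real neural network, and the weights, the image, and the function names are made up for illustration. It shifts every pixel by the same small amount in whichever direction lowers the score, and many small shifts add up to a flipped answer.

```python
def score(pixels, weights, bias=0.0):
    """Toy linear classifier: a weighted sum of pixel brightness values."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def label(pixels, weights, bias=0.0):
    return "cat" if score(pixels, weights, bias) > 0 else "not cat"


def sign(x):
    return (x > 0) - (x < 0)


def smallest_uniform_flip(pixels, weights, bias=0.0, margin=1.01):
    """Nudge every pixel by the same small step, against the current decision.

    For a linear score, the gradient with respect to each pixel is just its
    weight, so stepping each pixel by eps against sign(weight) changes the
    score by eps * sum(|weights|). We pick eps just large enough to cross zero.
    """
    s = score(pixels, weights, bias)
    eps = margin * abs(s) / sum(abs(w) for w in weights)
    direction = -1 if s > 0 else 1
    # Clamp to the valid brightness range [0, 1]; in general, clamping can
    # weaken the attack, but it has no effect on the example image here.
    nudged = [min(1.0, max(0.0, p + direction * eps * sign(w)))
              for p, w in zip(pixels, weights)]
    return nudged, eps


# Hypothetical 28x28 "image" and weights, chosen only so the arithmetic is easy.
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(784)]
image = [0.51 if i % 2 == 0 else 0.49 for i in range(784)]

adversarial, eps = smallest_uniform_flip(image, weights)
print(label(image, weights), "->", label(adversarial, weights), "with eps =", eps)
```

In this toy case each pixel moves by roughly one percent of the brightness range, a change a person would be unlikely to notice, yet the label flips. Real attacks on deep networks follow the same logic but get the gradient from the network itself.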

The concept of an adversarial object attack in the real world was only ever considered theoretical by researchers in the field. Now, LabSix, an AI research group at the Massachusetts Institute of Technology, has demonstrated the first example of a real-world 3D object becoming “adversarial” at any angle. The paper that describes the work was authored by Anish Athalye, Logan Engstrom, Kevin Kwok, and Andrew Ilyas.

“Just because other people haven’t been able to do it, doesn’t mean that adversarial examples can’t be robust,” Athalye told me in a phone conversation. “This conclusively demonstrates that yes, adversarial examples are a real concern that could affect real systems, and we need to figure out how to defend against them. This is something we should be worried about.”

He gave the example of self-driving cars as perhaps the most immediate threat: Someone with malicious intent and knowledge of how the car sees road signs could change a 15 mph speed limit sign into a 75 mph one, or a fire hydrant into a pedestrian. Airport screening is another concern: A carefully configured suitcase could trick an AI-powered scanner into thinking it’s safe.

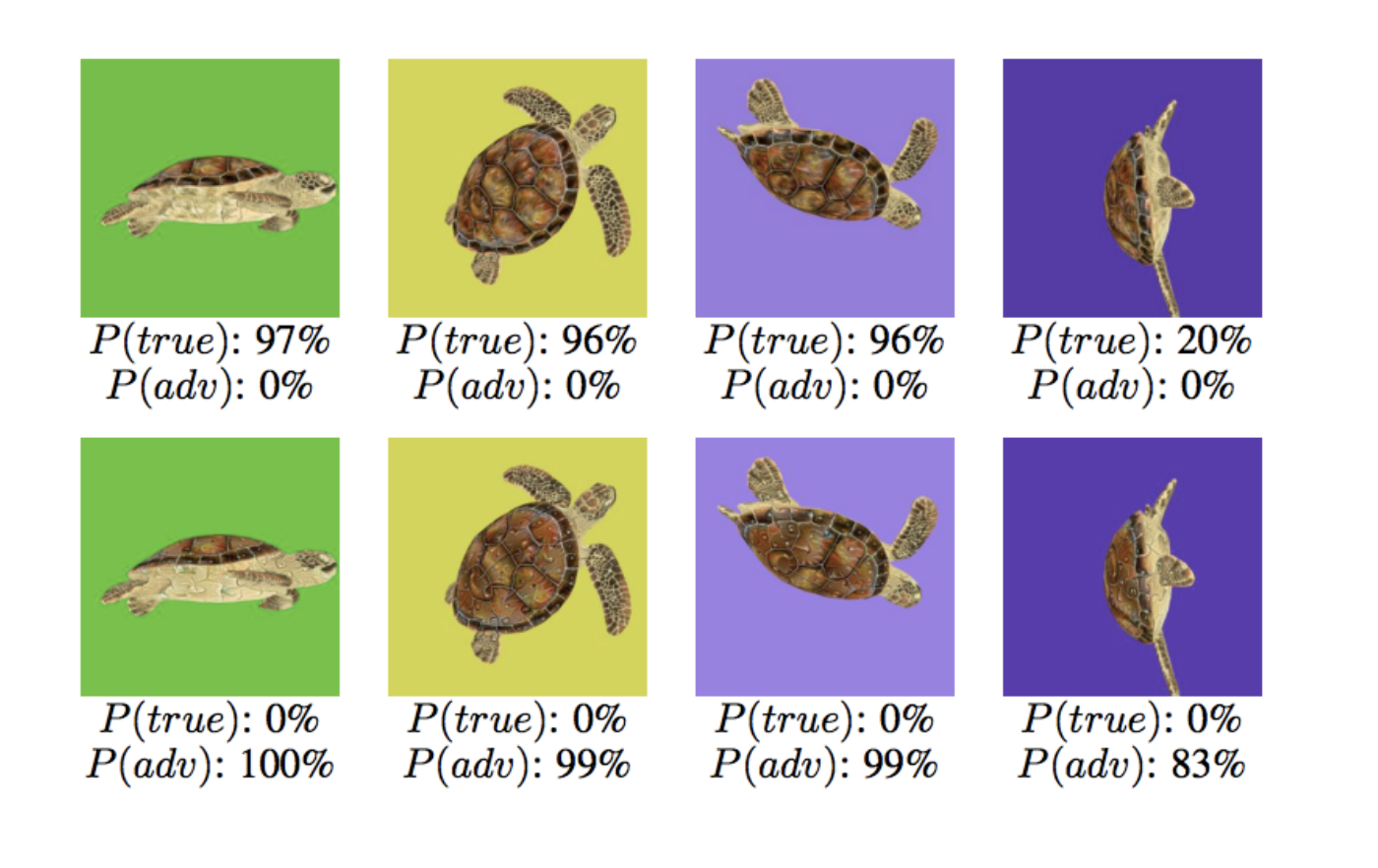

In more than 1,000 hours of work over the last six weeks, the group developed a new algorithm that produces objects that confuse a neural network no matter what angle the AI views them from. The main example they chose was a 3D-printed turtle. Unperturbed, the turtle is recognized by the AI as a turtle. But with a few slight changes to the turtle’s coloring, and with the shape kept exactly the same, they were able to make the computer think it was looking at a rifle (or any random object of their choosing) no matter the angle.
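The rough idea, which the group's paper calls Expectation Over Transformation, is to stop tuning the perturbation against one fixed picture and instead tune it against the average result over many simulated viewpoints and lighting conditions. The Python sketch below illustrates that idea under heavy assumptions and is not the group's code: the "object" is a ring of 64 brightness values, a cyclic shift stands in for a change of angle, a brightness gain stands in for lighting, and the classifier is a hypothetical linear score. All names and numbers are invented for the example.

```python
import math

N = 64  # number of brightness values in the toy "texture"

# Hypothetical linear classifier: score > 0 reads as "turtle", otherwise "rifle".
WEIGHTS = [math.cos(2 * math.pi * i / N) for i in range(N)]

# Stand-ins for viewpoint (shift) and lighting (gain) changes.
TRANSFORMS = [(shift, gain) for shift in (-1, 0, 1) for gain in (0.8, 1.0, 1.2)]


def render(texture, shift, gain):
    """Cyclically shift the texture and scale its brightness."""
    return [gain * texture[(i - shift) % N] for i in range(N)]


def score(view):
    return sum(w * v for w, v in zip(WEIGHTS, view))


def label(view):
    return "turtle" if score(view) > 0 else "rifle"


def sign(x):
    return (x > 0) - (x < 0)


def mean_score_gradient():
    """Gradient of the score, averaged over every transform, w.r.t. the texture.

    texture[i] lands at position i + shift with brightness gain, so it
    contributes gain * WEIGHTS[i + shift] to the score of that view.
    """
    grad = [0.0] * N
    for shift, gain in TRANSFORMS:
        for i in range(N):
            grad[i] += gain * WEIGHTS[(i + shift) % N] / len(TRANSFORMS)
    return grad


def robust_perturbation(eps=0.1, steps=10):
    """Projected sign-gradient descent on the average score.

    The gradient is constant here because the toy model is linear; with a real
    network it would be recomputed from sampled transforms at every step.
    """
    grad = mean_score_gradient()
    delta = [0.0] * N
    for _ in range(steps):
        delta = [max(-eps, min(eps, d - (eps / 5) * sign(g)))
                 for d, g in zip(delta, grad)]
    return delta


texture = [0.5 + 0.1 * w for w in WEIGHTS]  # made-up "turtle" coloring
delta = robust_perturbation()
adversarial = [t + d for t, d in zip(texture, delta)]

labels_before = {label(render(texture, s, g)) for s, g in TRANSFORMS}
labels_after = {label(render(adversarial, s, g)) for s, g in TRANSFORMS}
print(labels_before, labels_after)
```

Because the perturbation is optimized against the whole set of views at once, the same recolored texture is misread under every shift and gain in the set, not just one. The real work applies this kind of averaging to rendered 3D models and a deep network, which is far harder than this linear toy suggests.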

The ability to fool machines with adversarial examples has been an open problem since its discovery in 2013, Athalye said, but because no one takes it seriously as a real-world issue, there’s little research effort put toward answering how to prevent or fix it. Until now, adversarial images fell apart when they were printed out and reproduced, because the textures and pixel colorings shifted slightly.

Machines have demonstrated high levels of accuracy in categorization for years: In 2015, Microsoft programmed the first computer to beat a human at image recognition. Now, self-driving automobile makers are using similar machine learning algorithms in autonomous vehicles, and researchers are racing to disrupt tedious security tasks like airport TSA scans with machine vision.

“More and more real world systems are going to start using these technologies,” Athalye said. “We need to understand what’s going on with them, understand their failure modes, and make them robust against any kinds of attack.”
