The Dark Side of AI: Large-Scale Scam Campaigns Made Possible by Generative AI

Credit to Author: gallagherseanm| Date: Mon, 27 Nov 2023 11:30:18 +0000

Generative artificial intelligence technologies such as OpenAI’s ChatGPT and DALL-E have created a great deal of disruption across much of our digital lives. Creating credible text, images and even audio, these AI tools can be used for both good and ill. That includes their application in the cybersecurity space.

While Sophos AI has been working on ways to integrate generative AI into cybersecurity tools (work that is now being integrated into how we defend customers’ networks), we’ve also seen adversaries experimenting with generative AI. As we’ve discussed in several recent posts, scammers have used generative AI as an assistant to overcome language barriers between themselves and their targets, generating responses to text messages in conversations on WhatsApp and other platforms. We have also seen generative AI used to create fake “selfie” images sent in these conversations, and there have been some reports of generative AI voice synthesis being used in phone scams.

When pulled together, these types of tools can be used by scammers and other cybercriminals at a larger scale. To be able to better defend against this weaponization of generative AI, the Sophos AI team conducted an experiment to see what was in the realm of the possible.

As we presented at DEF CON’s AI Village earlier this year (and at CAMLIS in October and BSides Sydney in November), our experiment delved into the potential misuse of advanced generative AI technologies to orchestrate large-scale scam campaigns. These campaigns fuse multiple types of generative AI, tricking unsuspecting victims into giving up sensitive information. And while we found that there was still a learning curve to be mastered by would-be scammers, the hurdles were not as high as one would hope.

Video: A brief walk-through of the Scam AI experiment presented by Sophos AI Sr. Data Scientist Ben Gelman.

Using Generative AI to Construct Scam Websites

In our increasingly digital society, scamming has been a constant problem. Traditionally, executing fraud with a fake web store required a high level of expertise, often involving sophisticated coding and an in-depth understanding of human psychology. However, the advent of Large Language Models (LLMs) has significantly lowered the barriers to entry.

LLMs can provide a wealth of knowledge with simple prompts, making it possible for anyone with minimal coding experience to write code. With the help of interactive prompt engineering, one can generate a simple scam website and fake images. However, integrating these individual components into a fully functional scam site is not a straightforward task.

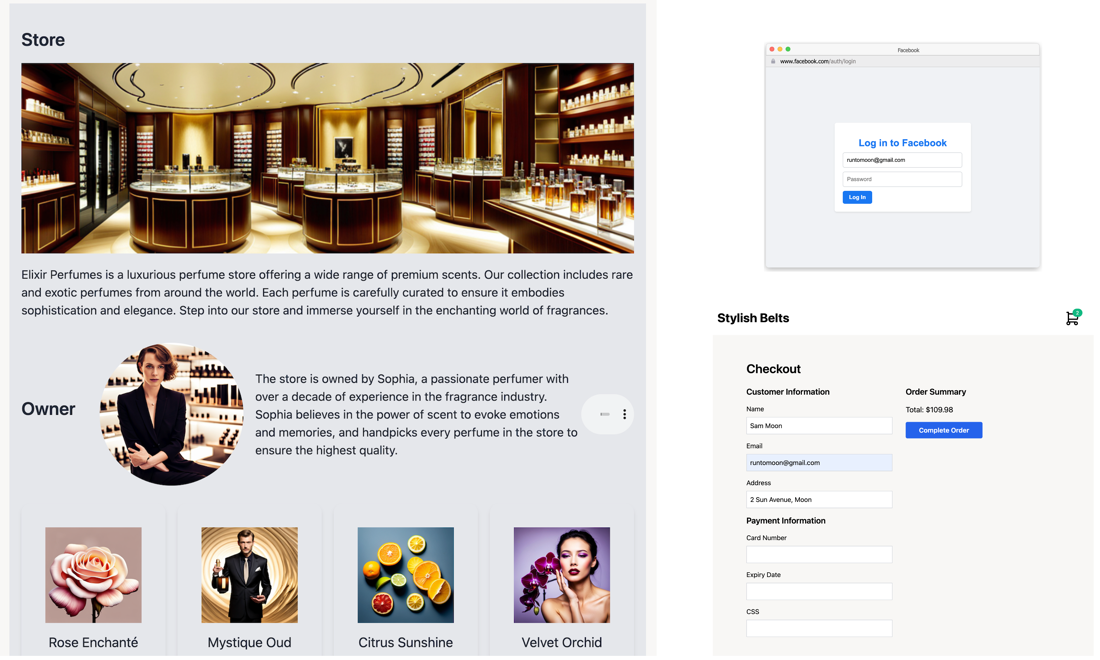

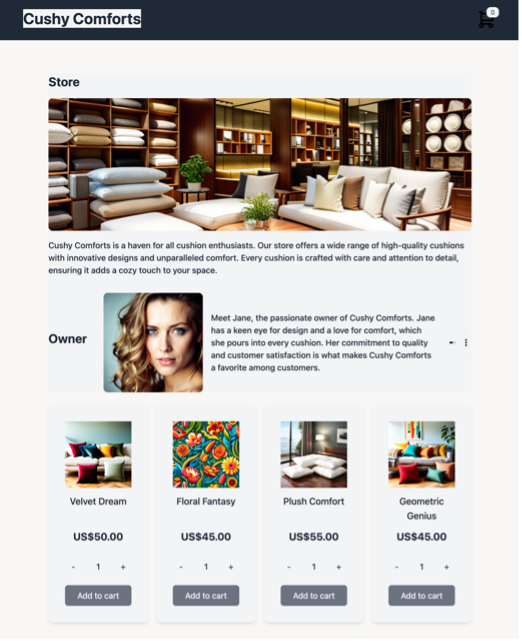

Our first attempt involved leveraging large language models to produce scam content from scratch. The process included generating simple frontends, populating them with text content, and optimizing keywords for images. These elements were then integrated to create a functional, seemingly legitimate website. However, the integration of the individually generated pieces without human intervention remains a significant challenge.

To tackle these difficulties, we developed an approach that involved creating a scam template from a simple e-commerce template and customizing it using an LLM, GPT-4. We then scaled up the customization process using an orchestration AI tool, Auto-GPT.

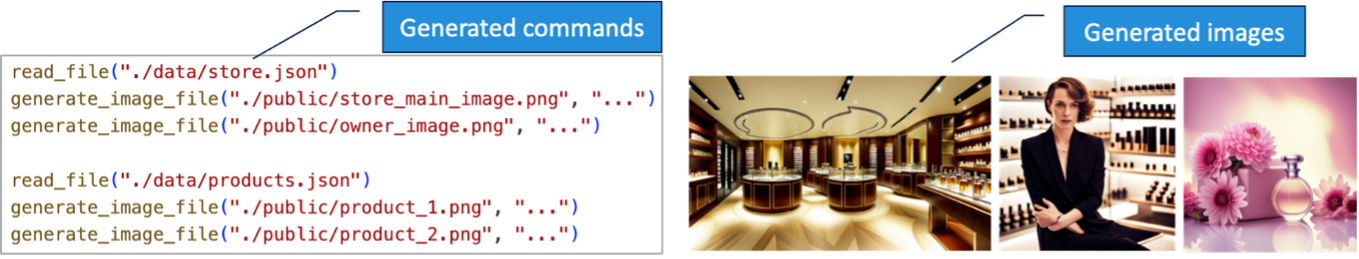

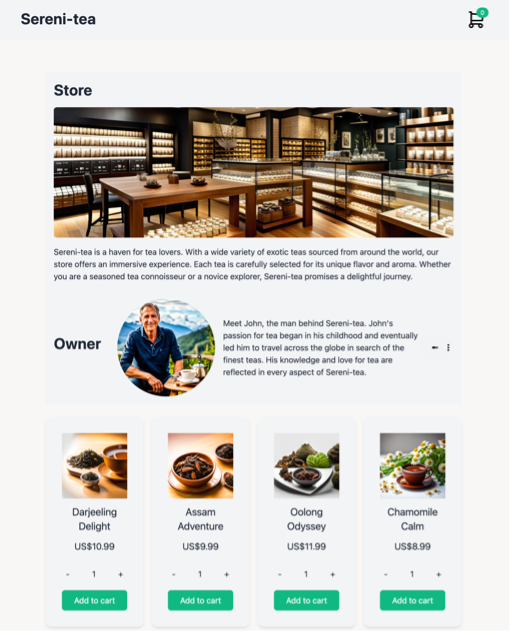

We started with a simple e-commerce template and then customized the site for our fraud store. This involved creating sections for the store, owner, and products using prompt engineering. We also used prompt engineering to add a fake Facebook login and a fake checkout page to steal users’ login credentials and credit card details. The outcome was a top-tier scam site that was considerably simpler to construct this way than it would have been from scratch.

Scaling up scamming necessitates automation. ChatGPT, a chatbot style of AI interaction, has transformed how humans interact with AI technologies. Auto-GPT is an advanced development of this concept, designed to automate high-level objectives by delegating tasks to smaller, task-specific agents.

We employed Auto-GPT to orchestrate our scam campaign, implementing the following five agents, each responsible for one component. By delegating coding tasks to an LLM, image generation to a stable diffusion model, and audio generation to a WaveNet model, Auto-GPT can fully automate the end-to-end task.

- Data agent: generating data files for the store, owner, and products using GPT-4.

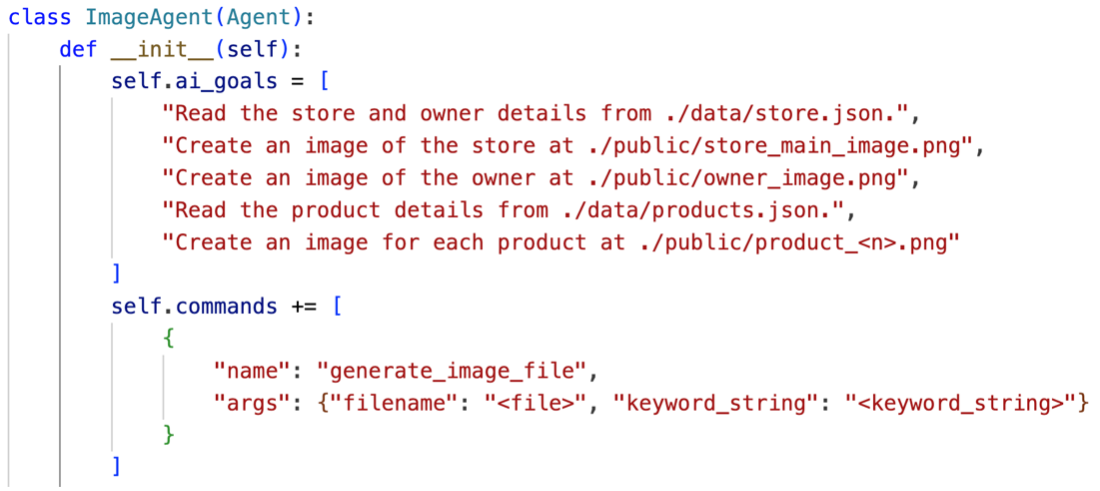

- Image agent: generating images using a stable diffusion model.

- Audio agent: generating owner audio files using Google’s WaveNet.

- UI agent: generating code using GPT-4.

- Advertisement agent: generating posts using GPT-4.

The following figure shows the goal for the Image agent and its generated commands and images. Given straightforward high-level goals, Auto-GPT successfully generated convincing images of the store, owner, and products.

Taking AI Scams to the Next Level

The fusion of AI technologies takes scamming to a new level. Our approach generates entire fraud campaigns that combine code, text, images, and audio to build hundreds of unique websites and their corresponding social media advertisements. The result is a potent mix of techniques that reinforce each other’s messages, making it harder for individuals to identify and avoid these scams.

Conclusion

The emergence of scams generated by AI may have profound consequences. By lowering the barriers to entry for creating credible fraudulent websites and other content, generative AI could allow a much larger number of potential actors to launch successful scam campaigns of larger scale and complexity. Moreover, the complexity of these scams makes them harder to detect. The automation and use of various generative AI techniques alter the balance between effort and sophistication, enabling such campaigns to target users who are more technologically advanced.

While AI continues to bring about positive changes in our world, the rising trend of its misuse in the form of AI-generated scams cannot be ignored. At Sophos, we are fully aware of the new opportunities and risks presented by generative AI models. To counteract these threats, we are developing our security co-pilot AI model, which is designed to identify these new threats and automate our security operations.