Algorithms Supercharged Gerrymandering. We Should Use Them to Fix It

Credit to Author: Daniel Oberhaus | Date: Tue, 03 Oct 2017 19:11:46 +0000

Today, the Supreme Court will hear oral arguments for Gill v. Whitford, in which the state of Wisconsin will argue that its redistricting practices are not subject to judicial oversight. At the core of this hearing is whether partisan gerrymandering (a tactic used by political parties to redraw voting districts so that the voting power within those districts is weighted toward their own party) was used to steal the 2012 state elections in Wisconsin from Democrats. The ramifications of this decision will be felt by the entire country.

The Supreme Court will be deciding whether federal courts have the ability to throw out district maps for being too partisan, which requires the justices to articulate just what constitutes partisan gerrymandering in the first place. The practice of gerrymandering has been a thorn in the side of American democracy for most of our nation’s existence, but it continues largely unabated due to the difficulty of defining the point at which a new congressional district can be considered the result of partisan gerrymandering.

Various solutions to America’s gerrymandering problem have been proposed over the years, but most of these have failed to gain traction. In September, however, a team of data scientists at the University of Illinois published, to little fanfare, a paper that offered a novel solution to America’s gerrymandering woes: Let an algorithm draw the maps.

Although the Illinois researchers, led by computer science professor Sheldon Jacobson, aren’t the first to propose using artificial intelligence as a solution to the redistricting process, they hope their new approach will be more accessible and fair than previous attempts at stopping gerrymandering with computation.

These computer scientists are motivated by the belief that data and algorithms will create transparency in the notoriously opaque redistricting process by exposing the inputs and parameters that led to a district being redrawn a certain way. With these inputs and parameters exposed, the researchers hope to incentivize a more equitable redistricting process. Yet they are also the first to acknowledge that the same algorithms that can create equity in congressional redistricting can also be used to gerrymander with unprecedented efficiency.

“If a group of politicians in a particular state wants to gerrymander the state to favor them, we can incorporate that into our algorithm and come up with districts which will satisfy their political agenda,” Jacobson told me over the phone. “We’re not political scientists trying to create an agenda. We’re trying to create a tool of transparency.”

Algorithms are already widely deployed in the service of partisan gerrymandering and are increasingly used as tools to keep gerrymandering in check. Yet for all their pretension to fairness, it’s far from certain that algorithms are actually creating more equity in the electoral process. This raises a profoundly important question for the future of the American experiment in democracy: If algorithms have been used for the last few decades to fuel gerrymandering, can they also offer a more equitable way forward?

A Brief History of Gerrymandering

Every decade, the United States carries out a population census, which, among other things, determines how many seats in the House of Representatives are allotted to each state. It is then up to the states to draw up congressional districts, each of which elects a representative to the House. The way the boundaries of these districts are decided varies from state to state, but a few elements pertain to almost every redistricting decision: all parts of a district must be geographically connected, and districts ought to follow the boundaries of cities, towns, or counties. Beyond these common elements, redistricting requirements and processes can vary significantly from state to state. In most cases, the state legislature controls the redistricting process, which means that the party with control over the legislature has the ability to gerrymander, or redraw congressional districts in the interests of that party.

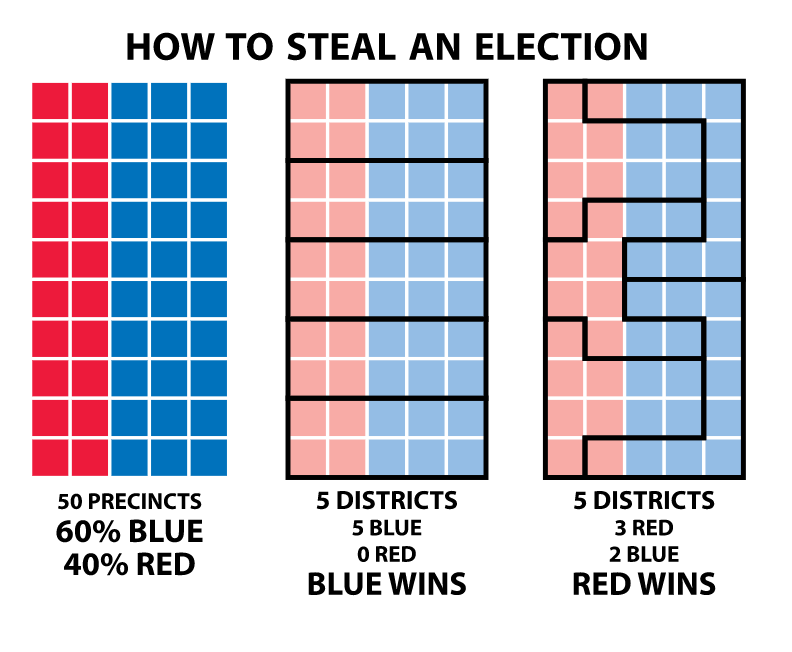

To get a better idea of how this practice can drastically affect elections, imagine a state that has 50 constituents. Of these constituents, let’s say 30 vote Democrat and 20 vote Republican, and the state has five seats in the House, as in the left-hand drawing of the figure above.

In an ideal system, the five congressional districts would be divided so that the constituents of each district all shared the same political affiliation. By making each vertical column its own district, the Democrats would get three congressional districts and the Republicans would get two, mirroring the 60-40 split of the vote. But what if Democrats controlled the redistricting process? By dividing the constituencies horizontally, they could ensure that their voters are the majority in each district and win all five seats in the House. Alternatively, if the Republicans were in control of the legislature, they could redistrict the state so that it has the convoluted boundaries seen in the figure on the far right. Under this plan, three districts are majority Republican, so the party would win a majority of the state’s House seats with only 40 percent of the vote.
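The seat arithmetic behind the three maps can be checked in a few lines of Python. This is a minimal sketch that assumes each plan is a list of (Democratic, Republican) voter counts, with 10 voters per district. The makeup of the "packed" map is a hypothetical split chosen to reproduce a 3-2 Republican outcome, and it is not necessarily the exact map in the figure.

```python
from typing import Dict, List, Tuple

# Each district is (democratic_voters, republican_voters).
District = Tuple[int, int]


def seats_won(plan: List[District]) -> Tuple[int, int]:
    """Return (democratic_seats, republican_seats) under simple majority rule."""
    dem = sum(1 for d, r in plan if d > r)
    rep = sum(1 for d, r in plan if r > d)
    return dem, rep


# Three ways to split the same 50 voters (30 D, 20 R) into five districts of 10.
plans: Dict[str, List[District]] = {
    # each vertical column is its own district: three all-D, two all-R
    "columns": [(10, 0)] * 3 + [(0, 10)] * 2,
    # horizontal rows: every district splits 6 D to 4 R
    "rows": [(6, 4)] * 5,
    # hypothetical Republican map: pack Democrats into two lopsided districts
    "packed": [(9, 1)] * 2 + [(4, 6)] * 3,
}

for name, plan in plans.items():
    # sanity checks: every plan covers the same electorate with equal districts
    assert sum(d for d, _ in plan) == 30 and sum(r for _, r in plan) == 20
    assert all(d + r == 10 for d, r in plan)
    print(name, seats_won(plan))
# columns (3, 2)
# rows (5, 0)
# packed (2, 3)
```

The vote totals are identical in all three plans. Only the lines change, and the seat outcome ranges from a 5-0 Democratic sweep to a 3-2 Republican majority.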

Of course in real life the distribution of voters in a state is not so neat, but the general idea behind gerrymandering—and its consequences—remains the same.

According to David Daley, the editor in chief of Salon and author of Ratfucked: The True Story Behind the Secret Plan to Steal America’s Democracy, gerrymandering is also a major source of political stasis in the country. Consider the 2016 election, when 400 of 435 House races were scored as “safe” for Democratic and Republican incumbents as a result of gerrymandering. After the election, only five seats had been transferred to the Democrats from Republicans, and only ten incumbents in total lost their seats. Not exactly what you’d expect at a time when the approval rating for Congress is consistently below 20 percent.

“Gerrymandering drains away competitive districts,” Daley told me in an email. “Uncompetitive districts have made the government responsive only to the extremes. They are a key reason why we can’t get action even on issues where most Americans agree. When we allow partisans to hijack district lines, we give them the power to hijack democracy itself.”

Historically, gerrymandering has been disproportionately wielded by GOP candidates to gain influence, but now some establishment Republicans such as Senator John McCain and Ohio Gov. John Kasich are starting to break ranks over the issue. The main problem is determining a fair way to draw districts and on what grounds districts can be challenged as the illegitimate products of gerrymandering.

A solution adopted by Arizona, Washington, and California was to establish standing nonpartisan commissions to preside over congressional redistricting following the 2010 census. This method of addressing gerrymandering has been adopted in the UK and in Commonwealth countries such as Australia and Canada, but nonpartisan commissions have been slow to catch on in the United States and aren’t necessarily as nonpartisan as they seem, according to Daley.

“Independent commissions are only as good as the criteria they are given to draw districts with, and the people who staff them,” Daley said. “Most states fill these commissions with partisans and political appointees. They end up being incumbent protection rackets, or in the case of Arizona, pushing an already secretive process deeper into the shadows.”

The Algorithmic Turn

The idea of applying computers to the redistricting process as a way to foster transparency and equity was first considered more than half a century ago, in 1961, and by 1965 an algorithm had been described that offered “simplified bipartisan computer redistricting.”

By the time the 1990 census rolled around, algorithms had become the norm for legislators and special interest groups hoping to get a leg up during the redistricting process, although fears about the effects of computers on redistricting were already being voiced in the early 1980s. A 1981 article in the Christian Science Monitor captured the spirit of the times, noting that “even those states that have taken the redistricting process either entirely or substantially out of the hands of state lawmakers are hesitant to step aside and let an impersonal, high-speed machine take over.”

The panic, it seems, was short-lived. In 1989, The New York Times reported that “a profusion of new computer technology will make it possible for any political hack to be a political hacker.” The article describes how at least 10 vendors were developing computer programs at the time that could be used by “politicians, their aides, and special interest groups as well as official redistricting commissions…to produce their own detailed versions of proposed election districts.”

For the last thirty years, an ever-growing suite of digital redistricting tools has become available to those with the technical knowledge and funds to wield this proprietary software. Although these algorithms were able to generate dozens of sophisticated district maps in a fraction of the time it would’ve taken a human to draw just one, they did little to actually solve America’s gerrymandering problem. Instead, these algorithms simply made it easier for politicians to create maps that served the interests of their party.

Are you a politician who needs to create district maps that favor Republicans in Wisconsin? No problem: the algorithm’s got you covered.

The Algorithms Strike Back

In 2016, Wendy Cho and her colleagues at the University of Illinois Urbana-Champaign (a different team than the one led by Jacobson) developed a complex algorithm that uses a supercomputer to generate hundreds of millions of possible district configurations in a matter of hours. The tool was designed to test an enticing idea: what if it were possible to generate every possible district map for a given state, and then use this set of all possible maps to judge the level of partisanship on an officially proposed district map? This tool would essentially be able to quantify the fairness of a given map against the set of every possible map. The only problem is that generating a set of all possible maps is, practically speaking, impossible due to the astronomically large number of potential maps.
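A rough calculation shows why enumerating every map is out of reach. If contiguity and population equality are ignored entirely, the number of ways to split n census blocks into k nonempty districts is the Stirling number of the second kind, S(n, k). Only a tiny fraction of those splits would be legal maps, so this figure is a crude upper bound and not a count of valid plans. The values of 24,000 blocks and 8 districts below are illustrative only, and the helper names are hypothetical.

```python
import math


def stirling2(n: int, k: int) -> int:
    """Ways to partition n labeled items into k nonempty, unlabeled groups.

    Uses the explicit formula S(n, k) = (1/k!) * sum_j (-1)^j * C(k, j) * (k - j)^n.
    """
    total = sum((-1) ** j * math.comb(k, j) * (k - j) ** n for j in range(k + 1))
    return total // math.factorial(k)  # the division is always exact


def approx_digits(x: int) -> int:
    """Approximate count of decimal digits of a huge positive int (avoids str())."""
    return math.floor(math.log10(x)) + 1


print(stirling2(10, 3))  # 9330
# Illustrative only: 24,000 blocks into 8 districts, with no legal constraints.
print(approx_digits(stirling2(24_000, 8)))  # 21670
```

Even this toy bound is a number with more than 21,000 digits. That is why sampling a large but manageable set of maps, rather than enumerating them all, is the practical route.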

Yet as Cho and her colleagues discovered, generating all possible maps isn’t necessary to achieve their intended goal. Rather, their algorithm whittled the number of necessary maps down to a much more manageable number: about 800 million per state. By using these hundreds of millions of maps as a point of comparison, Cho and her colleagues could statistically expose bias in redistricting procedures.
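The comparison step can be sketched as a simple outlier test. The sketch assumes that each simulated map has already been scored by how many seats one party would win under it. It illustrates the general idea of judging a proposed map against an ensemble, not Cho’s actual statistical method. The function name and the toy seat counts are hypothetical and carry no real-world meaning.

```python
from typing import Sequence


def tail_fraction(ensemble_seats: Sequence[int], proposed_seats: int) -> float:
    """Fraction of simulated maps giving a party at least as many seats as the proposal.

    A very small fraction suggests the proposed map is an outlier relative to
    what neutral map-drawing tends to produce.
    """
    if not ensemble_seats:
        raise ValueError("ensemble must not be empty")
    at_least = sum(1 for s in ensemble_seats if s >= proposed_seats)
    return at_least / len(ensemble_seats)


# Hypothetical toy ensemble: seats won by one party under 20 simulated maps.
ensemble = [4, 5, 5, 4, 5, 6, 5, 4, 5, 5, 4, 6, 5, 5, 4, 5, 5, 4, 5, 5]
print(tail_fraction(ensemble, 6))  # 0.1
print(tail_fraction(ensemble, 7))  # 0.0
```

With hundreds of millions of simulated maps instead of 20, a proposed map that lands far out in the tail becomes strong statistical evidence of deliberate bias.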

The downside is that these sorts of technologies are too inaccessible and expensive for general public use because they require a supercomputer to run. Furthermore, Cho’s algorithm is better deployed after the fact, to determine the degree to which a given district map is the result of partisan gerrymandering. If algorithms stand any chance of saving America’s democracy from gerrymandering, the algorithms and their code will also have to be accessible to all.

In this regard, the past decade has been something of a renaissance era for algorithmic redistricting. Take, for example, Bdistricting or Auto-Redistrict, two free open source programs that can be used to generate ostensibly fair, unbiased congressional districts without requiring a supercomputer. There are subtle differences between the types of algorithms these programs use to generate district maps, but both are characterized as local search metaheuristics. This is basically a fancy way of saying that the algorithms tweak tiny elements within a given domain over thousands of iterations until they arrive at a good, though not necessarily optimal, result.

In the case of gerrymandering, the tiny elements being manipulated by local search algorithms are census blocks, the smallest areas of a given state for which census data (such as population size) is available. There are over 11 million census blocks in the US, and each state has at least 24,000 census blocks. These algorithms basically reassign census blocks from one district to a neighboring one, one block at a time, subject to certain constraints (the census blocks in a given district must be contiguous, for example, and districts should have roughly equal populations), until no further change improves the result.
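Block-by-block local search can be sketched in a minimal form on a toy grid, where each cell stands in for a census block and the only goal is equal population. The sketch is a plain first-improvement hill climber and not the actual algorithm used by Bdistricting or Auto-Redistrict. All names, the grid, and the populations are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Block = Tuple[int, int]  # (row, col) of a toy "census block" in a grid


def neighbors(b: Block, rows: int, cols: int) -> List[Block]:
    """Blocks sharing an edge with b."""
    r, c = b
    cand = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    return [(i, j) for i, j in cand if 0 <= i < rows and 0 <= j < cols]


def is_contiguous(blocks: Set[Block], rows: int, cols: int) -> bool:
    """True if the blocks form one connected piece (breadth-first search)."""
    if not blocks:
        return False  # an empty district is not allowed
    start = next(iter(blocks))
    seen, queue = {start}, deque([start])
    while queue:
        for n in neighbors(queue.popleft(), rows, cols):
            if n in blocks and n not in seen:
                seen.add(n)
                queue.append(n)
    return len(seen) == len(blocks)


def imbalance(assign: Dict[Block, int], pop: Dict[Block, int], k: int) -> float:
    """Sum of squared deviations of district populations from the ideal size."""
    totals = [0] * k
    for b, d in assign.items():
        totals[d] += pop[b]
    ideal = sum(totals) / k
    return sum((t - ideal) ** 2 for t in totals)


def local_search(assign: Dict[Block, int], pop: Dict[Block, int], k: int,
                 rows: int, cols: int,
                 max_passes: int = 1000) -> Tuple[Dict[Block, int], float]:
    """Move one boundary block at a time; keep a move only if balance improves."""
    assign = dict(assign)
    score = imbalance(assign, pop, k)
    for _ in range(max_passes):
        improved = False
        for b in sorted(assign):
            src = assign[b]
            rest = {x for x, d in assign.items() if d == src and x != b}
            if not is_contiguous(rest, rows, cols):
                continue  # moving b would split or empty its district
            for n in neighbors(b, rows, cols):
                dst = assign[n]
                if dst == src:
                    continue  # neighbor is already in the same district
                assign[b] = dst  # b touches dst, so dst stays contiguous
                new_score = imbalance(assign, pop, k)
                if new_score < score:
                    score, improved = new_score, True
                    break
                assign[b] = src  # undo a move that did not help
        if not improved:
            break  # local optimum: no single-block move improves balance
    return assign, score


# Usage: a 2x4 grid of equal-population blocks, badly split 2 blocks vs 6.
rows, cols, k = 2, 4, 2
pop = {(r, c): 1 for r in range(rows) for c in range(cols)}
start = {b: (0 if b[1] == 0 else 1) for b in pop}
plan, score = local_search(start, pop, k, rows, cols)
print(score)  # 0.0
```

Real tools add more objectives, such as compactness or county splits, and work on irregular block shapes rather than a grid. The core loop is similar, though: propose a small boundary change, check the constraints, and keep the change only if the objective improves.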

The new algorithm released last month by Jacobson and his colleagues at the University of Illinois improves on these local search algorithms by adding a structural element called a geo-graph. Whereas other redistricting algorithms, such as Bdistricting or Auto-Redistrict, start by essentially randomly generating districts and then tweaking them over thousands of iterations, Jacobson’s algorithm first analyzes the data in census blocks, which can be pictured as points spread out on a graph, and then uses this data to create congressional districts optimized for certain goals.

“Both Bdistricting and Auto-Redistrict appear to be great tools, incorporate a variety of objectives, and can create fair districts,” Jacobson told me in an email. “But geo-graphs make each local search iteration step highly efficient. This means that what may take them hours, we can do in minutes on a laptop.”

The problem, however, is that although the algorithms themselves are entirely apolitical (their only goal is to find the optimal solution to a problem given a certain data set and parameters), the process of determining what parameters should be fed to the algorithm is still hopelessly mired in the all-too-human world of politics.

“Although the criteria for districts is a political issue, creating these districts is not,” Jacobson told me. “A computer can’t decide the criteria for creating a district, but it can create districts based on these criteria better than any human could do. Ultimately, this is an algorithmic data problem.”

Who Programs the Programmers?

On the surface, it seems that defining parameters that automatically lead to fair districts would be relatively straightforward. Bdistricting, for instance, generated district maps that were “optimized for equal population and compactness only.” The idea here is that these two criteria are free from “partisan power plays” since they’re based on metrics that have nothing to do with politics. But as the San Francisco-based programmer Chris Fedor labored to show, unbiased districts don’t necessarily result in fair districts. As Fedor detailed in a Medium post, algorithmically optimizing district boundaries for compactness (as Bdistricting does) or randomly generating the boundaries actually leads to districts that are more gerrymandered than the boundaries drawn by politicians.
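Compactness can be scored in several ways, and the text does not say which formula Bdistricting uses. One common measure is the Polsby-Popper score, 4πA/P², where A is a district’s area and P its perimeter. It equals 1 for a circle and approaches 0 for long, snaking shapes. The following is a minimal sketch for a district outline given as a simple polygon, and the example shapes are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def area(poly: List[Point]) -> float:
    """Polygon area via the shoelace formula (vertices in order, first not repeated)."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2


def perimeter(poly: List[Point]) -> float:
    """Sum of edge lengths, including the closing edge back to the start."""
    return sum(math.dist(a, b) for a, b in zip(poly, poly[1:] + poly[:1]))


def polsby_popper(poly: List[Point]) -> float:
    """4*pi*area / perimeter**2: 1.0 for a circle, near 0 for stringy shapes."""
    return 4 * math.pi * area(poly) / perimeter(poly) ** 2


square = [(0, 0), (1, 0), (1, 1), (0, 1)]
sliver = [(0, 0), (10, 0), (10, 0.1), (0, 0.1)]  # same area, far less compact
print(round(polsby_popper(square), 3))  # 0.785
print(round(polsby_popper(sliver), 3))  # 0.031
```

A score like this says nothing about who lives inside the lines. A map can be highly compact and still produce lopsided partisan outcomes, which is exactly the gap between unbiased and fair.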

“Algorithms can hide as much as they reveal,” Micah Altman, a professor of computer science at MIT, told me in an email. “Technical choices in an algorithm, how one formalizes criteria, and software implementation can have tremendous implications, but are much more difficult for non-experts to review than comparison of maps and their consequences.”

This point is further reinforced in a 2010 paper in the Duke Journal of Constitutional Law and Public Policy by Altman and the University of Florida political scientist Michael McDonald, which analyzed several trends in the application of digital technologies to the redistricting process. Bdistricting and Auto-Redistrict belong to a class of algorithms that seek to automate the redistricting process entirely. Yet as the authors of the paper point out, these types of algorithms run up against some fundamental limits. Creating optimally compact, contiguous, and equal-population districts belongs to a class of mathematical problems described as “NP-hard,” which roughly means that no known algorithm can solve every instance of the problem both quickly and exactly.

A way to get around the NP-hardness of redistricting is to approach the problem heuristically, which is a fancy way of saying that a computer can find a solution, but not necessarily the best one. As Altman and McDonald describe them, these heuristic algorithms are “problem-solving procedures that, while they may yield acceptable results in practice, provide no guarantees of yielding good solutions in general. Specifically, an algorithm is heuristic if it cannot be shown to yield a correct result, or correct within a known error or approximation or having a known probability of correctness.” Every attempt at automated redistricting, including all of the algorithms mentioned above, has relied on heuristics.

As Altman and McDonald acknowledge, the computational limits of the redistricting problem could be addressed if the nonpartisan criteria for redistricting were greatly simplified and ranked (for instance, equal populations are more important than compactness, contiguity more important than the rest, etc.). Yet so far, anything close to a standard for measuring fair districts is lacking, and this very issue has been at the heart of landmark Supreme Court cases on gerrymandering.
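Ranking criteria in this way amounts to a lexicographic comparison. Under that rule, a plan wins on a lower-priority criterion only if it ties on every higher-priority one. The following is a minimal sketch that assumes each plan has already been scored on three hypothetical metrics where lower is better. The priority order follows the example in the text (contiguity, then population, then compactness), and the class, fields, and scores are all illustrative.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PlanScore:
    name: str
    contiguity_violations: int   # districts that are not in one piece
    population_deviation: float  # hypothetical: max percent deviation from ideal size
    compactness_penalty: float   # hypothetical: lower means more compact

    def rank_key(self) -> Tuple[int, float, float]:
        # Python compares tuples element by element, so earlier criteria dominate.
        return (self.contiguity_violations, self.population_deviation,
                self.compactness_penalty)


def best_plan(plans: List[PlanScore]) -> PlanScore:
    """Pick the plan that wins under the strict priority ordering."""
    return min(plans, key=PlanScore.rank_key)


plans = [
    PlanScore("A", 0, 0.5, 0.40),
    PlanScore("B", 0, 0.5, 0.25),  # ties A on the first two criteria, more compact
    PlanScore("C", 1, 0.0, 0.10),  # best numbers otherwise, but not contiguous
]
print(best_plan(plans).name)  # B
```

A strict ranking like this makes the trade-offs explicit and easy to audit. Choosing the order and the metrics, however, is still the political decision the text describes.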

The Gill v. Whitford case being heard at the Supreme Court on Tuesday is a challenge to the Court’s 1986 ruling in Davis v. Bandemer, which held that partisan gerrymandering can be subject to judicial oversight, although the justices were divided on what standards courts should use to judge such challenges.

As Justice Byron White wrote in his plurality opinion in the Bandemer case, a “group’s electoral power is not unconstitutionally diminished by the fact that an apportionment scheme makes winning elections more difficult, and a failure of proportional representation alone does not constitute discrimination.” According to White, discrimination in gerrymandering “only occurs when the electoral system is arranged in a manner that will consistently degrade a voter’s or a group of voters’ influence on the political system as a whole.”

The Supreme Court’s inability to settle on standards for judging partisan gerrymandering came to a head in a 2004 case, Vieth v. Jubelirer, in which a plurality of justices concluded that challenges to partisan gerrymandering should not be subject to court oversight because no general standards for judging them exist. Yet the Court ultimately didn’t overturn the 1986 Bandemer ruling that partisan gerrymandering is subject to judicial oversight, because Justice Anthony Kennedy, who supplied the deciding vote, left open the possibility that such standards may emerge in the future.

Automated redistricting algorithms face basically the same problem the Supreme Court articulated in 2004: there is still no way to measure at what point a district has been “gerrymandered,” and there are no hard rules for what a “fair district” is. (There are, however, legal protections against gerrymandering on racial grounds.) Merely creating districts randomly or optimizing them for unbiased parameters (such as compactness or equal population) is no guarantee that those districts are fair. In the absence of simple standards that would make automated redistricting truly feasible, Altman and McDonald suggest another approach to the redistricting problem: using algorithms to foster transparency.

Bridging the Analog/Digital Divide

Following the establishment of standing nonpartisan redistricting commissions, Arizona, Washington, and California are now the only three states required to solicit public input on redistricting decisions. In these states, a lightweight algorithm like the one developed by Jacobson and his colleagues could become an invaluable resource that allows voters to make informed decisions about redistricting plans. Even in states where the redistricting process is entirely in the hands of legislators, algorithmic tools would empower the public to keep their legislators in check by exposing the assumptions that went into the creation of congressional districts.

“Algorithms are a terrific tool and there could definitely be a role for them,” Daley said. “But like independent commissions, they are only as good as the criteria that govern them. The meaningful structural reform that we need involves the way we think about voting and districting itself.”

Still, the combination of algorithms and independent redistricting commissions may offer a way forward, according to Altman and McDonald. As they argued in an article for Scholars Strategy Network, the internet, coupled with sophisticated and accessible redistricting software, can allow for mass participation in the redistricting process. If this public participation is facilitated by a truly independent commission, which makes all the data, plans and analyses transparent and the tools used to arrive at districts available to the public so that they can compare and comment on these plans, it may be possible to finally create equitable districts.

The plan sounds utopian, but Altman and McDonald have put their principles to work. In 2010, they released an open source tool called districtbuilder that enabled broad public participation in the redistricting process by allowing anyone to create, edit, and analyze district plans in a collaborative online environment. While this may sound like it would be reserved for computer nerds with technical know-how, Altman told me it was so simple that they were able to teach elementary school children how to create legal district plans with the tool.

“Algorithmic redistricting, along with software that enables the public to participate in examining, evaluating and contributing to plans, when implemented thoughtfully and transparently, can be useful as a method of assisting human to argue for and to implement their representational goals,” Altman said. “However, most proposed algorithmic solutions, such as maximizing some basic notions of geographic compactness, at best embed a set of arbitrary and poorly understood biases into the system, and at worst are cover for attempts to implement political outcomes by proxy.”

At the core of the anti-gerrymandering crusade is the notion that constituents should be represented in accordance with their beliefs, instead of being subjected to the whims of politicians vying to entrench their party’s power. That our elected representatives are beholden to their constituencies, and not vice versa, is a fundamental tenet of our democracy.

Algorithms are not a silver bullet for our nation’s gerrymandering problem, but as Altman has labored to show, they can be effectively incorporated into the political apparatus to make the process of redistricting more transparent and fair. But algorithms can only do so much, and their biggest limitation right now is a lack of quantifiable criteria for what constitutes fair districts. Determining these criteria is a totally political issue, and as much as we might wish for an automated solution, no amount of computing power will ever crack that problem.